NAPLAN, numeracy and nonsense

by Burkard Polster and Marty Ross

The Age, 13 May 2013

It’s time for a chat about NAPLAN. To which suggestion comes back the cry “Dear lord, please, let’s not!”

That’s us: that’s your Maths Masters crying out, desperately wishing to avoid this discussion. But it has to be had. This week a zillion Aussie schoolkids will sit down for their NAPLAN testing and the question is why?

Yes, there has been no shortage of painful promotion. The Federal Minister for Early Childhood and Youth eagerly spruiks on NAPLAN’s behalf. ACARA, the government body responsible for preparing and analysing the NAPLAN tests, indicates two supposed benefits of the tests: first, as a guide for schools to make improvements; second, as a mechanism of public accountability.

We and others are not convinced. Even ignoring the issue of the huge resources employed, and the question of obtaining value for time and money, we believe that the accountability aspect of NAPLAN, the naming and effective shaming on the myschool website, is needless and nasty.

However, that is not the debate we wish to re-have. Even accepting as possible the purported applications, there are aspects to NAPLAN that appear to have received almost no proper public discussion: what exactly is being tested, who evaluates the tests and who evaluates the testers?

We last wrote on NAPLAN two years ago. Our central complaint was that the numeracy tests were just that: they were tests of numeracy, a poor and poorly defined substitute for mathematics. We wrote about some glaring gaps in what was tested, the overwhelming focus on (often contrived) applications, the unbelievable decision to permit calculators in the years 7 and 9 tests, and we poked fun at some specific, silly questions.

At the time we thought we’d said enough, that that column would be our last word on NAPLAN. Then, foolishly, we decided to take a peek at the 2012 numeracy tests. We were astonished to discover how difficult it was to take that peek.

ACARA has made it extraordinarily difficult to obtain past NAPLAN tests or any helpful analysis of the tests. Yes, there are “practice tests” and yes, each year ACARA publishes a voluminous report on the outcomes of the tests. But not the tests themselves, nor how students on average performed on specific questions from those tests.

Teachers, if they try hard enough, can usually obtain this information. However, little Johnny Public and his mother have effectively no hope. ACARA has an application process but the process and the assistance offered appear to have been modelled on the Yes Minister Manual for Public Service. (We have applied for some data and at some point, when it’s done, we hope to write about the fun of it all.)

The message, intended or otherwise (and we doubt the “otherwise”), is that the tests should be accepted as a gift from the gods. Unfortunately, at times the gods appear to be crazy.

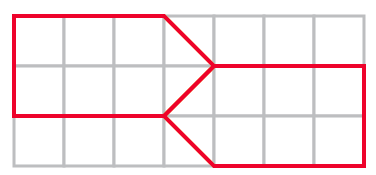

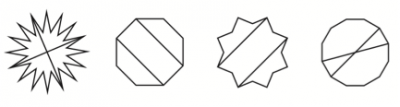

Consider the following question, which appeared on the year 7 and year 9 numeracy tests in 2012:

The question asked which of the four figures above “looks identical” after a quarter turn. The grumpy and reasonable answer from one of our colleagues was “all of them”. Still, though the wording is clumsily vague, it’s pretty clear what the question is asking, and about 70 per cent of students nationwide correctly chose the first figure.

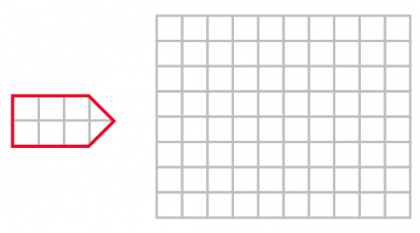

However, consider the following question, which appeared on the year 3 and year 5 numeracy tests in 2012:

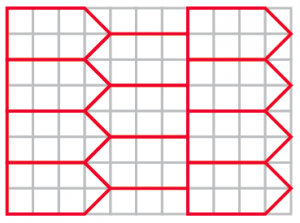

The students were presented with an arrow-shaped “tag”, and the problem was to determine how many tags that “look like this” could be cut out from the pictured sheet of cardboard.

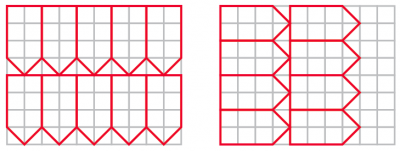

About 20 per cent of year 3 students and 40 per cent of year 5 students gave the intended answer of 10 tags, presumably arranging the tags as in the picture on the left below.

Unfortunately the tag question is ambiguous. If “look like this” has the same meaning as “looks identical” then the tags would presumably have to be oriented the same as the sample tag, and then a maximum of eight tags could be cut from the cardboard (above, right). Indeed, a significant percentage of students gave eight as their answer.

There’s another, larger issue with the tag question. We assume that the intended approach is to enlarge each tag to a 2 x 4 rectangle and then see how many of those rectangles can be cut out of the cardboard. However, that approach can lead to an incorrect answer.

Imagine the dimensions of the cardboard are 8 x 11, rather than 8 x 10 as given in the question. With the approach described the extra column is of no help, and we can still only fit in 10 tags. However, if we interlace the tags as pictured below then 11 tags will fit.

Now, interlacing does not actually improve the answer for the 8 x 10 grid given in the test question, but of course we don’t know that until we try it. Interlacing is an approach that must be considered for a thorough treatment of the question.
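For readers who want to check the bounding-rectangle arithmetic, here is a minimal sketch in Python. It rests on assumptions not stated in the text: that the sheet is 10 units wide and 8 tall, and that the sample tag sits in a rectangle 4 wide and 2 tall, which is consistent with the counts of eight and 10 quoted above. The function names are hypothetical, chosen for illustration. The sketch covers only simple grid layouts of rectangles; it does not model the tag's arrow shape, so it cannot find an interlaced arrangement.

```python
def grid_count(sheet_w, sheet_h, rect_w, rect_h):
    """Count rect_w x rect_h rectangles in a plain rows-and-columns layout."""
    return (sheet_w // rect_w) * (sheet_h // rect_h)


def bounding_box_counts(sheet_w, sheet_h, rect_w=4, rect_h=2):
    """Counts for the two uniform orientations of the bounding rectangle.

    rect_w=4, rect_h=2 is an assumed orientation for the sample tag.
    """
    return {
        # Every tag oriented the same way as the sample tag.
        "same_orientation": grid_count(sheet_w, sheet_h, rect_w, rect_h),
        # Every tag given a quarter turn.
        "quarter_turned": grid_count(sheet_w, sheet_h, rect_h, rect_w),
    }


# Compare the sheet from the test (assumed 10 wide) with the wider sheet.
for width in (10, 11):
    print(width, bounding_box_counts(width, 8))
```

Under these assumptions both widths give 8 for tags kept in the sample orientation and 10 for tags given a quarter turn, so a count based on rectangles sees no benefit from the extra column.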

The tag question is archetypally bad. The more you understand the intrinsic difficulty of the question the less likely you are to arrive at the correct answer.

It is not our intention to nitpick and we don’t want to make too much out of the above questions. We know how difficult it is to write clear and unambiguous questions, and many of the questions on the 2012 tests are well written and the students’ results are genuinely, depressingly, informative. We are also aware that ACARA has its own evaluation procedures and that each year they attempt to improve on the last.

Nonetheless, there is a genuine and general issue. The above questions may be more clearly problematic but they are by no means uniquely so. There are many questions on the 2012 numeracy tests on which the students performed poorly, and it is often difficult to be sure why. It may be that the students have struggled with basic arithmetic, or it may be that their general reading comprehension is poor.

However, for at least some of the questions, we suspect the major problem is simply the students' failure to cope with the contrived context and contrived wording of the question. And it must be noted: if the NAPLAN tests consisted of fewer context-based questions and included more straight tests of arithmetic skills, the problem of poorly worded questions would be much less of an issue.

That brings us to the overarching issue: accountability. Not that we expect ACARA, or anyone, to accept us as judges of the NAPLAN tests, and indeed your Maths Masters don't even agree: one of us considers the NAPLAN tests to be flawed but to serve a genuine function; the other believes that the tests are so bad that they're hilarious.

But ACARA is, or at least should be, accountable to the public. When less than 5 per cent of students nationally get a test question correct, the public has a right to know that. They have a right to know the question and they have a right to know how the results from that question are being interpreted. They have a right to question whether the results are a reasonable outcome of subtle test design, an indication of some systemic issue with students' mathematical skills or knowledge, or simply an example of a poorly written question. Currently, ACARA doesn't lift a finger to enable any of this to occur.

ACARA is proud to promote the value of accountability when it is teachers and schools that bear the burden and the scrutiny. Whether or not that is appropriate sauce for the goose, it is long past time there was arranged some similar sauce for the gander.

Burkard Polster teaches mathematics at Monash and is the university's resident mathemagician, mathematical juggler, origami expert, bubble-master, shoelace charmer, and Count von Count impersonator.

Marty Ross is a mathematical nomad. His hobby is smashing calculators with a hammer.

Copyright 2004-∞ All rights reserved.